see neural networks, convolution

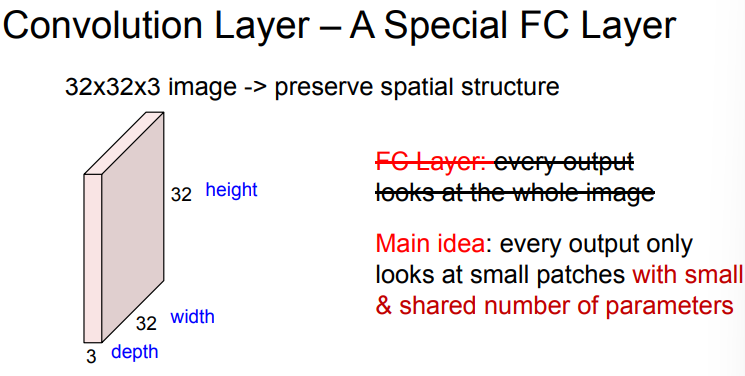

- Local Connectivity: Each output depends only on a small input patch (reduces parameters).

- Parameter Sharing: The same filter scans the entire image (efficient computation).
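
To make the parameter savings concrete, here is a rough count comparing a fully connected layer with a single shared filter producing the same number of outputs. The helper names `dense_params` and `conv_params` are made up for this sketch, and the 32×32×3 input / 5×5×3 filter sizes are borrowed from the convolution example in these notes.

```python
def dense_params(in_h, in_w, in_c, out_units):
    """Weights + biases when every output sees every input value."""
    return in_h * in_w * in_c * out_units + out_units


def conv_params(k, in_c, num_filters):
    """Weights + biases when each filter is k x k x in_c and is reused everywhere."""
    return k * k * in_c * num_filters + num_filters


# Both layers map a 32x32x3 input to 28*28 = 784 output values.
dense = dense_params(32, 32, 3, 28 * 28)  # no locality, no sharing
conv = conv_params(5, 3, 1)               # one local, shared 5x5x3 filter

print(dense)  # 2409232
print(conv)   # 76
```

Local connectivity shrinks each output's fan-in from 3072 values to 75, and parameter sharing means those 75 weights (plus one bias) are stored once rather than once per output position.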

operations

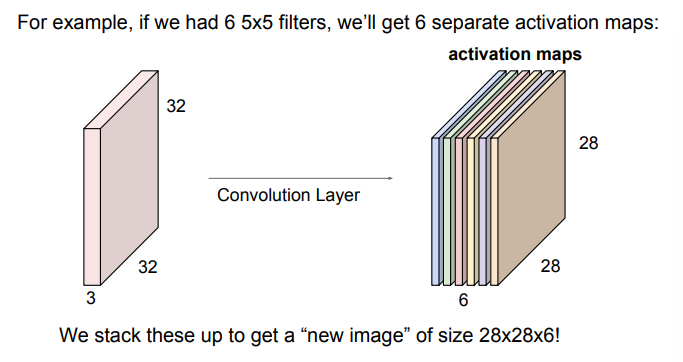

- Convolution: slide learned filters over the input to extract features

- Example: A 5×5×3 filter applied to a 32×32×3 input produces a 28×28×1 output (no padding, stride=1).

- Stride and padding

- Stride: How many pixels the filter shifts per step; a stride greater than 1 shrinks the output.

- Padding: Adds zeros around the input border so the output can keep the input's spatial dimensions.

- Pooling (downsampling)

- Reduces spatial size while preserving the strongest features. Max pooling, for example, takes the maximum over each window, set by filter size K and stride S.
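
The three operations above can be sketched in plain Python. This is a deliberately naive, loop-based version for reading, not an efficient implementation; `conv2d` and `max_pool` are hypothetical names, and like most deep-learning code it computes cross-correlation (the filter is not flipped).

```python
def conv2d(image, kernel, stride=1, pad=0):
    """Slide one K x K x C filter over an H x W x C image.

    image and kernel are nested lists indexed [row][col][channel].
    Returns a single 2D activation map.
    """
    h, w, c = len(image), len(image[0]), len(image[0][0])
    k = len(kernel)
    # Zero-pad the border so the filter can also cover edge pixels.
    padded = [[[0.0] * c for _ in range(w + 2 * pad)] for _ in range(h + 2 * pad)]
    for i in range(h):
        for j in range(w):
            padded[i + pad][j + pad] = list(image[i][j])
    out_h = (h + 2 * pad - k) // stride + 1
    out_w = (w + 2 * pad - k) // stride + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for oi in range(out_h):
        for oj in range(out_w):
            total = 0.0
            # Dot product between the filter and the patch under it,
            # summed across the full depth of the input.
            for di in range(k):
                for dj in range(k):
                    for ch in range(c):
                        total += (padded[oi * stride + di][oj * stride + dj][ch]
                                  * kernel[di][dj][ch])
            out[oi][oj] = total
    return out


def max_pool(fmap, k=2, stride=2):
    """Keep the maximum of each k x k window of a 2D activation map."""
    out_h = (len(fmap) - k) // stride + 1
    out_w = (len(fmap[0]) - k) // stride + 1
    return [[max(fmap[i * stride + di][j * stride + dj]
                 for di in range(k) for dj in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


# 32x32x3 input of ones and a 5x5x3 filter of ones (toy values).
image = [[[1.0, 1.0, 1.0] for _ in range(32)] for _ in range(32)]
kernel = [[[1.0, 1.0, 1.0] for _ in range(5)] for _ in range(5)]

fmap = conv2d(image, kernel)            # no padding, stride 1
same = conv2d(image, kernel, pad=2)     # padding keeps 32x32
strided = conv2d(image, kernel, stride=2)
pooled = max_pool(fmap)                 # 2x2 window, stride 2

print(len(fmap), len(fmap[0]))        # 28 28
print(len(same), len(same[0]))        # 32 32
print(len(strided), len(strided[0]))  # 14 14
print(len(pooled), len(pooled[0]))    # 14 14
```

With `pad=2` the corner output only overlaps a 3×3×3 region of real pixels, so its value drops from 75 to 27; that is the border effect zero padding introduces.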

architecture

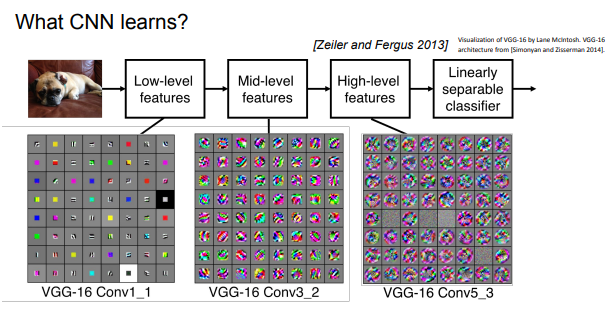

- hierarchical feature learning

- early layers detect edges

- middle layers detect corners

- deeper layers capture semantic meaning

- Example: AlexNet (2012), the first CNN to outperform traditional methods by a large margin on the ImageNet classification challenge.
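
As an illustration of what "edge detection" means at the filter level, here is a hand-written Sobel-style vertical-edge filter applied to a tiny grayscale image. This is an assumption-laden sketch: a real CNN learns its filters from data, and the filter, the helper `filter2d`, and the toy image are chosen here only for illustration.

```python
# Sobel-style filter: responds where intensity changes from left to right.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]


def filter2d(img, kernel):
    """Slide a k x k filter over a 2D image (no padding, stride 1)."""
    k = len(kernel)
    out_h, out_w = len(img) - k + 1, len(img[0]) - k + 1
    return [[sum(img[i + di][j + dj] * kernel[di][dj]
                 for di in range(k) for dj in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


# 5x6 image: dark (0) left half, bright (1) right half.
img = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
response = filter2d(img, SOBEL_X)

for row in response:
    print(row)  # [0, 4, 4, 0] on each of the 3 rows
```

The response is zero over flat regions and peaks where the window straddles the dark-to-bright boundary, which is the kind of pattern early-layer filters tend to pick up.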

conv layer needs 4 hyperparams

- num filters (output channels)

- filter size K

- stride S

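- zero padding P

- produces output of size $W_2 \times H_2 \times D_2$ for an input of size $W_1 \times H_1 \times D_1$ and $N$ filters, where $W_2 = (W_1 - K + 2P)/S + 1$, $H_2 = (H_1 - K + 2P)/S + 1$, and $D_2 = N$

- num params: $K \cdot K \cdot D_1$ weights per filter, so $K \cdot K \cdot D_1 \cdot N$ weights and $N$ biases in total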

- convolve each filter with the image by computing dot products at every position; filters always extend through the full depth of the input volume
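
A small calculator for the four hyperparameters, assuming the usual output-size relation (W − K + 2P)/S + 1 per spatial dimension and one bias per filter. The function names are hypothetical, and the floor division reflects a common convention for strides that do not divide evenly, not something these notes specify.

```python
def conv_output_shape(w_in, h_in, d_in, num_filters, k, stride=1, pad=0):
    """Output volume (width, height, depth) of a conv layer."""
    w_out = (w_in - k + 2 * pad) // stride + 1
    h_out = (h_in - k + 2 * pad) // stride + 1
    # Depth equals the number of filters: one activation map per filter.
    return w_out, h_out, num_filters


def conv_num_params(d_in, num_filters, k):
    """Total learnable parameters: k*k*d_in weights per filter, plus one bias each."""
    weights = k * k * d_in * num_filters
    biases = num_filters
    return weights + biases


# The example from these notes: one 5x5x3 filter on a 32x32x3 input.
print(conv_output_shape(32, 32, 3, num_filters=1, k=5))          # (28, 28, 1)

# Ten 5x5 filters with padding 2 keep the 32x32 spatial size.
print(conv_output_shape(32, 32, 3, num_filters=10, k=5, pad=2))  # (32, 32, 10)
print(conv_num_params(d_in=3, num_filters=10, k=5))              # 760
```

Note that `d_in` affects the parameter count but not the output shape, since every filter spans the full input depth and collapses it into a single map.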

other

- param configs

- Gradually reduce spatial dimensions while increasing channels to balance computation.

- Example: 224×224×3 → 55×55×48 → 13×13×192.

- CNNs automatically learn hierarchical representations, loosely analogous to human vision.

- They replace handcrafted features (e.g., HOG, SIFT) with data-driven filters.

one filter ⇒ one activation map