This has always been the bottleneck keeping me from a genuine, full understanding of ML. It’s unintuitive to me: 3D space is difficult for me to visualize, and all the interchangeable properties and labels make it hard to discern what things actually mean.

resources

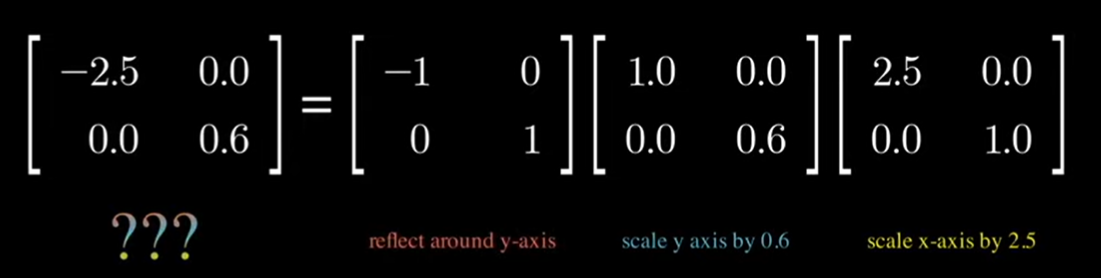

- visualizing transformation matrices, pt 2 → transformation matrix

- ‘Identity off by one’ (an identity matrix with one diagonal entry changed) - scales along the specific axis that’s ‘off’

- negative values flip (mirror) the image along the corresponding axis

- matrix multiplication → get one matrix that composes the transformations of the matrices being multiplied (with column vectors, the rightmost matrix is applied first)

- a square diagonal matrix can always be decomposed into a product of single-axis scaling matrices (one ‘identity off by one’ per axis)

- shear matrix = changes shape into parallelogram along an axis, but preserves area

- orthogonal matrix =

  - square

  - all column vectors are unit vectors

  - all column vectors are orthogonal to each other

  - always produces a rotation or a reflection (a pure rotation when the determinant is +1, a reflection when it is -1)

- subspace
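
To make the notes above concrete for myself, here is a minimal 2D sketch in plain Python. The helper names (`matmul`, `apply`, `det2`, `is_orthogonal`) and the example matrices are my own, made up for illustration and not taken from the resources. It assumes column vectors, so in a product the rightmost matrix is applied first.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply(m, v):
    """Apply matrix m to a column vector v stored as a flat list."""
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def det2(m):
    """Determinant of a 2x2 matrix (the signed area scale factor)."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def is_orthogonal(m, tol=1e-9):
    """True if m^T m is the identity, i.e. the columns are orthonormal."""
    p = matmul(transpose(m), m)
    n = len(m)
    return all(abs(p[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))


# Identity "off by one": only one diagonal entry differs from 1.
scale_x = [[2, 0], [0, 1]]    # stretches x by 2
scale_y = [[1, 0], [0, 3]]    # stretches y by 3
flip_x = [[-1, 0], [0, 1]]    # negative entry mirrors the x coordinate
shear = [[1, 1.5], [0, 1]]    # slants along x, determinant stays 1

point = [1, 1]
scaled = apply(scale_x, point)     # [2, 1]
flipped = apply(flip_x, point)     # [-1, 1]

# Composition: one matrix that does "scale_x first, then shear".
composed = matmul(shear, scale_x)
one_step = apply(composed, point)
two_steps = apply(shear, apply(scale_x, point))

# A diagonal matrix is a product of single-axis scalings.
diagonal = matmul(scale_x, scale_y)   # [[2, 0], [0, 3]]

# Orthogonal matrices: rotation has det +1, reflection has det -1.
theta = math.pi / 2
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]
reflection = [[1, 0], [0, -1]]

print(scaled, flipped, one_step, two_steps, diagonal)
print(is_orthogonal(rotation), is_orthogonal(reflection), is_orthogonal(shear))
```

The determinant is a handy check here: it is 1 for the shear (area preserved), +1 for the rotation, and -1 for the reflection, while the shear fails the orthogonality test because its columns are not perpendicular unit vectors.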

basics

(left off on slide 60 of lin alg review)

- Pixels are represented as vectors

- An image can be treated as both a matrix (a grid of pixel values) and a vector (the same values flattened into one long list)
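
A small sketch of the two views, in plain Python. The 2x3 grayscale image and the RGB pixel are hypothetical values I made up, and reading "pixels as vectors" as "an RGB pixel is a 3-component vector" is my assumption about what the slides mean.

```python
# Hypothetical 2x3 grayscale image: a matrix with one intensity per pixel.
image = [
    [0, 128, 255],
    [64, 192, 32],
]
height, width = len(image), len(image[0])

# Vector view: flatten the rows into one long list (row-major order).
flat = [value for row in image for value in row]

# Matrix view again: cut the vector back into rows of length `width`.
restored = [flat[r * width:(r + 1) * width] for r in range(height)]

# Assumed interpretation: a color pixel is a vector of channel values (R, G, B).
rgb_pixel = [255, 128, 0]

print(flat)                # [0, 128, 255, 64, 192, 32]
print(restored == image)   # True
```

Flattening loses nothing as long as the width is remembered, which is why the same image can be handed to code that expects either shape.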